RAID, ZFS, and MDADM: Understanding Different Storage Solutions

It's generally recommended to use software RAID to combine multiple disks into a single large array with redundancy. However, in this article, I want to take a closer look at hardware RAID solutions, how they function, and how they compare to software-based solutions like ZFS and MDADM.

My goal is to explore aspects like ease of management, hardware requirements, CPU usage during IO-heavy tasks, performance in different scenarios, and rebuild times. There are many interesting things to cover when setting up RAID arrays and other ways of achieving redundancy, so let's dive in.

Hardware vs. Software RAID: The Basics

When looking at different RAID setups, the first thing I want to do is break down what I consider to be hardware RAID and software RAID, and provide a general overview of how they work.

One of the most traditional examples of hardware RAID is a Dell PERC H710P RAID controller. This card has its own dedicated chipset to handle all the RAID calculations, meaning that the system's CPU doesn't have to do any extra work. It also has a gigabyte of onboard memory, so it can cache write operations and hold them temporarily before storing them on the physical disks. To keep that data safe, it includes a battery backup unit (BBU), which allows the controller to retain cached data even if power is lost. Once the system is powered back on, it can safely complete the pending write operations.

Software RAID, on the other hand, works completely differently. Instead of having a dedicated RAID controller that hides the underlying disk structure, software RAID solutions expose all individual drives to the operating system. The software then manages data distribution across those disks.

I'm focusing on ZFS and MDADM for this article because they are the most common Linux-based RAID alternatives to hardware RAID controllers. But there are plenty of others, like Btrfs, Windows Storage Spaces, Unraid, and more. They all aim to combine multiple disks into a single volume while providing redundancy options.

Hardware RAID: Pros and Cons

One of the main advantages of hardware RAID is that it simplifies things for the operating system. The OS just sees a single drive, rather than a collection of separate disks. That means any operating system—Windows, Linux, BSD—can work with a RAID array managed by a hardware RAID card.

However, there are drawbacks too. Hardware RAID requires an actual RAID controller card, which isn't included in most consumer-grade systems and adds extra cost. You'll also need a free PCIe slot (often x8), and all the drives must be connected directly to the RAID card.

Another thing to consider is the battery backup. These batteries wear out over time, and once they degrade, the controller can no longer safely cache writes. It typically falls back from write-back to write-through mode, which significantly affects write performance. I'll talk more about this later when looking at write speeds.

Software RAID: Pros and Cons

Software RAID is much more flexible when it comes to hardware. As long as the drives are visible to the operating system, they can be part of a software RAID array. You could even mix different types of storage—SATA, NVMe, or even networked iSCSI drives (though that's generally not recommended).

Another huge advantage of software RAID is portability. If your system crashes or you want to move your drives to a new system, all you need is the appropriate software (MDADM, ZFS, etc.), and the array can usually be detected and reassembled without issues.

Hardware RAID, on the other hand, locks you into specific RAID controllers. If your RAID card fails, you usually need the same model (or at least the same manufacturer) to access your data again.

Managing RAID: Pre-Boot Environments and OS Utilities

Hardware RAID cards often come with a pre-boot environment, allowing you to configure the array before the OS even loads. You can set up arrays, delete arrays, and check disk status from there. In my experience, though, these tools can be picky.

For example, server-grade motherboards tend to work fine with RAID cards, but consumer-grade boards (like gaming motherboards) sometimes don't even recognize the RAID card properly.

If the pre-boot utility doesn't work, RAID cards usually have OS utilities for management. However, these utilities aren't updated very often. While Debian 12 might support your RAID card's utility today, Debian 18 might not, making long-term support a concern.

Software RAID solutions like MDADM and ZFS avoid this issue because they are actively maintained and will continue to work across future Linux versions.

Performance, Rebuild Times, and CPU Usage

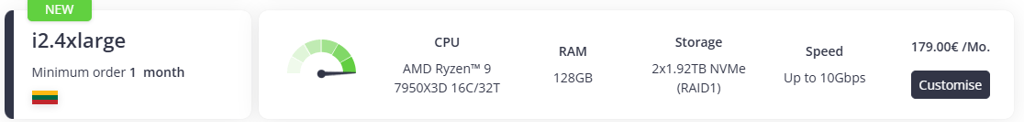

For benchmarking, I set up eight 4TB WD Red Plus hard drives in a RAID 6 array. RAID 6 is one of the worst cases for CPU usage since it requires two independent parity calculations for every stripe, so it's a great way to test performance. A RAID calculator can be used to check disk redundancy and available storage space for a given layout.
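As a rough sketch of what such a calculator does for striped and parity-based levels, the arithmetic is simply "drives minus parity drives, times drive size". The function and table names here are my own for illustration, and the result ignores filesystem overhead and the TB versus TiB difference.

```python
# Number of drives' worth of capacity consumed by parity, per RAID level.
PARITY_DRIVES = {"raid0": 0, "raid5": 1, "raid6": 2}
# Commonly cited minimum drive counts for each level.
MIN_DRIVES = {"raid0": 2, "raid5": 3, "raid6": 4}


def usable_capacity_tb(level, drive_count, drive_size_tb):
    """Usable capacity in TB, assuming all drives are the same size."""
    if drive_count < MIN_DRIVES[level]:
        raise ValueError(f"{level} needs at least {MIN_DRIVES[level]} drives")
    return (drive_count - PARITY_DRIVES[level]) * drive_size_tb


def fault_tolerance(level):
    """How many drives can fail without losing data."""
    return PARITY_DRIVES[level]


# The test array from this article: eight 4 TB drives in RAID 6.
print(usable_capacity_tb("raid6", 8, 4))  # 24
print(fault_tolerance("raid6"))           # 2
```

So the eight-drive test array gives 24 TB of usable space and survives any two drive failures.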

Sequential Read/Write Performance

Hardware RAID performed the best, delivering close to theoretical maximum speeds for RAID 6.

ZFS was slightly behind hardware RAID but still solid.

MDADM was noticeably slower, especially in writing.

I tried tuning MDADM (adjusting stripe cache size, enabling direct mode, etc.), but I couldn't get it to perform much better. My best guess is that the way MDADM deals with the write hole problem costs more performance than I expected.

The RAID Write Hole Problem

RAID arrays often face the write hole problem, where incomplete writes during power loss leave the array in an inconsistent state.
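To make the write hole concrete, here is a toy simulation. It assumes a single stripe with simple XOR parity (RAID 5 style, to keep it short), and the block contents are made up. A data block is updated, the "power fails" before the parity block is rewritten, and a later rebuild of a different disk silently produces wrong data.

```python
from functools import reduce


def xor_blocks(blocks):
    """XOR equally sized byte blocks together, column by column."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


# One stripe: three data blocks plus their parity block.
data = [b"AAAA", b"BBBB", b"CCCC"]
parity = xor_blocks(data)

# Consistent stripe: if disk 1 dies, XOR of the survivors restores it.
rebuilt_ok = xor_blocks([data[0], data[2], parity])
print(rebuilt_ok == b"BBBB")  # True

# Now disk 0 is rewritten, but power is lost before parity is updated.
data[0] = b"ZZZZ"
stale_parity = parity  # never rewritten

# Later, disk 1 fails. Rebuilding it from the stale parity gives garbage,
# even though disk 1 was never written to.
rebuilt_bad = xor_blocks([data[0], data[2], stale_parity])
print(rebuilt_bad == b"BBBB")  # False
```

The nasty part is that nothing looks wrong until a disk actually fails, which is why each solution needs some way to make the data and parity update effectively atomic.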

Hardware RAID fixes this with battery-backed cache, allowing it to store writes safely.

ZFS handles this differently using copy-on-write, meaning it never modifies existing data—it just writes a new copy.
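The copy-on-write idea can be sketched in a few lines. This is a conceptual toy, not how ZFS is actually implemented: the `CowStore` class and its `crash_before_commit` flag are hypothetical names I made up to show why an interrupted write cannot corrupt the previously committed data.

```python
class CowStore:
    """Toy copy-on-write store: blocks are never overwritten in place."""

    def __init__(self):
        self.blocks = {}   # block id -> payload
        self.next_id = 0
        self.root = None   # id of the currently committed block

    def _allocate(self, payload):
        block_id = self.next_id
        self.next_id += 1
        self.blocks[block_id] = payload
        return block_id

    def write(self, payload, crash_before_commit=False):
        # Step 1: write the new data to a fresh location.
        new_id = self._allocate(payload)
        if crash_before_commit:
            return  # simulated power loss: the new block is just orphaned
        # Step 2: switch the root pointer in a single step.
        self.root = new_id

    def read(self):
        return self.blocks[self.root]


store = CowStore()
store.write(b"version 1")
store.write(b"version 2", crash_before_commit=True)
print(store.read())  # b'version 1'
```

After the simulated crash, a reader still sees the old, fully consistent version. The half-finished write only wasted some space.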

MDADM can use a write journal to close the write hole. It is effective, but when the journal lives on spinning disks rather than on a fast onboard cache, every write pays for it, which likely explains the slower write speeds.

RAID Rebuild Times

ZFS won this test easily. Since ZFS only rebuilds (resilvers) the blocks that actually hold data, not the entire drive, recovery times were much faster.

Hardware RAID was faster than MDADM but still had to rebuild the entire disk.

MDADM was the slowest of the three.
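A back-of-the-envelope estimate shows why rebuilding only the used data matters so much. The 150 MB/s sustained throughput and the 40% fill level below are assumptions picked for illustration, not measurements from my tests, and real rebuilds are rarely perfectly sequential.

```python
def rebuild_hours(bytes_to_copy, throughput_mb_s):
    """Time to write bytes_to_copy at a sustained throughput (MB/s), in hours."""
    seconds = bytes_to_copy / (throughput_mb_s * 1_000_000)
    return seconds / 3600


DRIVE_BYTES = 4 * 10**12      # one 4 TB drive
ASSUMED_THROUGHPUT = 150      # MB/s, hypothetical sustained rebuild speed
ASSUMED_FILL = 0.40           # hypothetical: pool is 40% full

# Whole-disk rebuild (hardware RAID, MDADM): every sector is rewritten.
full = rebuild_hours(DRIVE_BYTES, ASSUMED_THROUGHPUT)

# Data-only rebuild (ZFS): only the allocated portion is rewritten.
data_only = rebuild_hours(DRIVE_BYTES * ASSUMED_FILL, ASSUMED_THROUGHPUT)

print(f"full disk: {full:.1f} h, data only: {data_only:.1f} h")
```

Under these assumptions the whole-disk rebuild takes about 7.4 hours and the data-only rebuild about 3 hours. The emptier the pool, the bigger the gap.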

CPU Usage During RAID Operations

Hardware RAID had the lowest CPU usage by far, which is expected since the RAID controller handles all calculations. Software RAID (MDADM and ZFS) did use some CPU, but even on an old dual-Opteron system, it wasn't enough to cause actual performance issues.
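For a sense of the work involved, here is a minimal sketch of the two RAID 6 parities in the commonly described P+Q scheme: P is a plain XOR of the data blocks, while Q weights each block by a power of 2 in the Galois field GF(2^8). I'm assuming the reduction polynomial 0x11d that the Linux kernel's RAID 6 code uses. Real implementations rely on lookup tables and SIMD instead of byte-by-byte loops, so treat this purely as an illustration.

```python
def gf_mul(a, b):
    """Multiply two bytes in GF(2^8) with reduction polynomial 0x11d."""
    result = 0
    while b:
        if b & 1:
            result ^= a
        a <<= 1
        if a & 0x100:
            a ^= 0x11D
        b >>= 1
    return result


def gf_pow(a, n):
    result = 1
    for _ in range(n):
        result = gf_mul(result, a)
    return result


def gf_inv(a):
    """Multiplicative inverse: a^254 == a^-1 for nonzero a in GF(2^8)."""
    return gf_pow(a, 254)


def pq_parity(blocks):
    """Return (P, Q) for a list of equally sized data blocks."""
    p = bytearray(len(blocks[0]))
    q = bytearray(len(blocks[0]))
    for i, block in enumerate(blocks):
        coeff = gf_pow(2, i)
        for j, byte in enumerate(block):
            p[j] ^= byte
            q[j] ^= gf_mul(coeff, byte)
    return bytes(p), bytes(q)


def recover_from_q(blocks, missing, q):
    """Rebuild data block `missing` using only Q (e.g. P is lost too)."""
    acc = bytearray(q)
    for i, block in enumerate(blocks):
        if i == missing:
            continue
        coeff = gf_pow(2, i)
        for j, byte in enumerate(block):
            acc[j] ^= gf_mul(coeff, byte)
    inverse = gf_inv(gf_pow(2, missing))
    return bytes(gf_mul(inverse, byte) for byte in acc)


# Six data blocks, as in an eight-drive RAID 6 stripe (6 data + P + Q).
stripe = [bytes([i * 16 + k for k in range(4)]) for i in range(6)]
p, q = pq_parity(stripe)
print(recover_from_q(stripe, 3, q) == stripe[3])  # True
```

The XOR for P is nearly free, but Q needs a field multiplication per byte, and that is the part a hardware controller takes off the CPU.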

Final Thoughts: What Should You Use?

After spending a week running tests, I found that software RAID is generally the better choice for home servers due to flexibility, ease of management, and long-term compatibility.

However, if you already have a server with a RAID card, there's no reason not to use it. Performance-wise, hardware RAID still wins in many cases, but the dependency on a specific RAID controller is a big drawback.

For users who want the best all-in-one solution, ZFS is probably the smartest choice. It combines RAID, volume management, snapshots, and self-healing into one system. But if you need cross-OS support (like a dual-boot Windows/Linux setup), hardware RAID is sometimes the only option.

There's no perfect RAID solution—each has trade-offs depending on your specific needs.